GStreamer motion detection

Overview

A GStreamer motion detection trigger that works without decoding the H.264 stream.

- High efficiency: able to process a large number of streams simultaneously

- Input data format: H.264

- Supports the H.264 (02/14) standard and earlier versions

- Adaptive sensitivity threshold

Application

Surveillance systems, in cases where IP cameras have no built-in motion detector, or where the server cannot use it or its use is impractical.

A preliminary motion detection stage in video analysis pipelines.

Project description

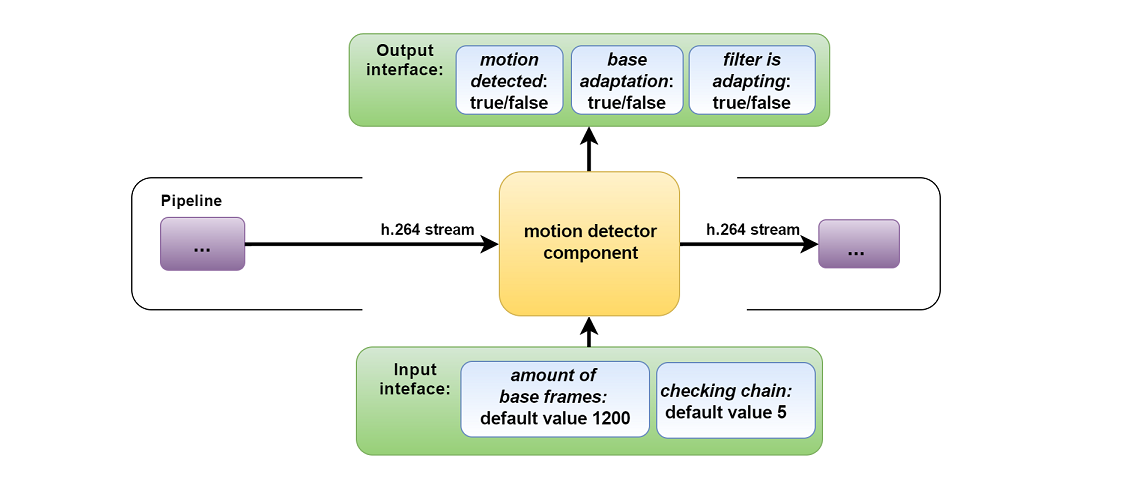

The main feature of the GStreamer component we developed to detect the fact of motion is that it works without first decoding the H.264 stream. It is implemented for GStreamer 1.0 and works by analyzing the headers of packed NAL units (Network Abstraction Layer Units). Consequently, it only establishes the fact of motion, which can then be used to trigger further analysis. This is the component's only disadvantage.
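
Reading NAL unit headers requires only a few bit operations per unit, which is why this approach is so cheap. The following is a minimal Python sketch, not the component's code, assuming Annex B byte-stream input (stream-format=byte-stream in GStreamer caps). It splits the stream on start codes and reads the one-byte header fields defined by H.264 (forbidden_zero_bit, nal_ref_idc, nal_unit_type). `split_annex_b` and `NalUnit` are hypothetical names, and which header-derived values the component actually analyzes is not stated here.

```python
from typing import List, NamedTuple


class NalUnit(NamedTuple):
    nal_ref_idc: int    # 2-bit field; 0 marks a non-reference unit
    nal_unit_type: int  # 5-bit field, e.g. 1 = non-IDR slice, 5 = IDR slice, 7 = SPS, 8 = PPS
    size: int           # bytes in the unit, including the 1-byte header


def split_annex_b(stream: bytes) -> List[NalUnit]:
    """Split an Annex B byte stream on 00 00 01 start codes and read only
    the one-byte NAL header of each unit; nothing is decoded."""
    # Offsets of the first byte after every 3-byte start code.
    starts = []
    pos = stream.find(b"\x00\x00\x01")
    while pos >= 0:
        starts.append(pos + 3)
        pos = stream.find(b"\x00\x00\x01", pos + 3)

    units = []
    for n, start in enumerate(starts):
        end = starts[n + 1] - 3 if n + 1 < len(starts) else len(stream)
        # A NAL unit never ends in 0x00, so trailing zeros belong to the next
        # 4-byte start code or to trailing_zero_8bits padding.
        payload = stream[start:end].rstrip(b"\x00")
        if not payload:
            continue
        header = payload[0]
        if header & 0x80:  # forbidden_zero_bit set: corrupt unit, skip it
            continue
        units.append(NalUnit((header >> 5) & 0x03, header & 0x1F, len(payload)))
    return units
```

Emulation prevention bytes do not need to be removed here, because only the first byte of each unit is inspected.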

Analyzing frame headers makes it possible to detect motion in a frame with high probability without decoding the frames. Preliminary tests on a PC with an Intel Core Duo 2.66 GHz processor showed that, when the component was inserted into the test pipeline rtspsrc, rtph264depay, avdec_h264, autovideosink, it consumed 0.6% of CPU and 10.6 MB of memory. This is far lower than the cost of decoding the stream.

The filter is adaptive, i.e. it builds a mathematical model whose sensitivity threshold can change when external conditions, such as illumination, change. The initial model of the variation of the network packet coefficients is built from a buffer of frames whose size is set by the control variable base frames. The modeled data are then compared with the coefficients obtained from incoming NAL units. When motion is present, the H.264 encoder produces new motion vectors; these change the coefficients carried in the resulting packet and cause a mismatch between the model and the data obtained from the NAL units.
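
The model-versus-observation comparison can be illustrated with a sliding-window statistic. This hypothetical Python sketch assumes the per-frame feature is simply the coded frame size in bytes (the text does not say which coefficients the component uses) and models it with the mean and standard deviation of the last `base_frames` values, mirroring the base frames variable. `AdaptiveModel`, `k`, and `min_std` are invented for illustration. Because the deviation allowed is `k` standard deviations of the window, the threshold adapts as conditions drift.

```python
from collections import deque
from statistics import mean, pstdev


class AdaptiveModel:
    """Hypothetical sketch of an adaptive primary model.

    The feature is the coded size of each frame in bytes (an assumption);
    the model is the mean and spread of the last `base_frames` values.
    """

    def __init__(self, base_frames: int = 25, k: float = 3.0, min_std: float = 1.0):
        self.window = deque(maxlen=base_frames)  # buffer sized by "base frames"
        self.k = k              # sensitivity: allowed deviation in standard deviations
        self.min_std = min_std  # floor so a perfectly static scene is not hypersensitive

    def update(self, frame_size: float) -> bool:
        """Return True when frame_size does not fit the model (motion suspected)."""
        if len(self.window) < self.window.maxlen:
            self.window.append(frame_size)  # still collecting the initial model
            return False
        mu = mean(self.window)
        sigma = max(pstdev(self.window), self.min_std)
        mismatch = abs(frame_size - mu) > self.k * sigma
        if not mismatch:
            # Adapt only on frames that fit, so slow changes such as
            # illumination drift move the model and its threshold.
            self.window.append(frame_size)
        return mismatch
```

Since mismatching frames are never added to the buffer, a lasting change of scene would keep the model locked; the secondary filter described below is what addresses that case.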

Changes in the overall recording conditions of the scene, such as shadows, lighting, or a change of camera position, lead to false motion detection, so it is necessary to distinguish real motion from a simple change of lighting or from light noise. A secondary filter counters this effect by analyzing the video sequence in 1-second segments (the number of segments analyzed is set by the control variable checking chain). The secondary filter is designed so that updating of the buffer data resumes once recording conditions are stable. For example, suppose the camera has changed its position, so the scene and its illumination have changed, but there is no motion in the frame. Such a situation would lead to a permanent mismatch between the model and the received data. The segment-based secondary filter unlocks adaptation, reducing the mismatch, which allows motion detection to continue under the new conditions.
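
The secondary filter can be sketched as a stability test over per-second segments. In this hypothetical Python sketch, each segment is summarized by a single number (for instance the mean coded frame size in that second, which is an assumption), `checking_chain` mirrors the control variable of the same name, and `should_unlock` and `rel_tolerance` are invented for illustration. If the last `checking_chain` segments agree with each other within a relative tolerance, the scene is treated as stable, for example a new camera position with no motion, and adaptation may resume.

```python
from statistics import mean
from typing import Sequence


def should_unlock(segment_means: Sequence[float], checking_chain: int = 5,
                  rel_tolerance: float = 0.1) -> bool:
    """Hypothetical sketch of the segment-based secondary filter.

    segment_means holds one value per 1-second segment. Returns True when
    the last `checking_chain` segments are mutually consistent, i.e. the
    recording conditions look stable and model updating can resume.
    """
    # A single segment is trivially "consistent", so require at least two.
    if checking_chain < 2 or len(segment_means) < checking_chain:
        return False
    recent = segment_means[-checking_chain:]
    center = mean(recent)
    if center == 0:
        return False
    # Relative spread between the largest and smallest segment value.
    spread = (max(recent) - min(recent)) / center
    return spread <= rel_tolerance
```

When `should_unlock` returns True, one option is to clear the primary model's buffer and refill it from the new scene; real motion tends to make consecutive segments disagree, which keeps the model locked.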